K-FAC 19 (1900°F Rated Mineral Wool Board: 1/2" and 1" Thick x 48" x 96" Sheets) - Foundry Service & Supplies, Inc.

INDUCTIVE SENSOR, VALVE POSITION MONITOR, Sn: 3MM, SUPPLY RANGE 10-30VDC, PNP, 2xNO, 5M PVC CABLE, IP67, NBN3-F31-E8-K, PN:047568, PEPPERL

INDUCTIVE SENSOR, VALVE POSITION MONITOR, Sn: 3MM, SUPPLY 8VDC (NAMUR), 2-WIRE, 2xNC, TERMINAL BOX, NCN3-F31K-N4-K, PN:222680, PEPPERL

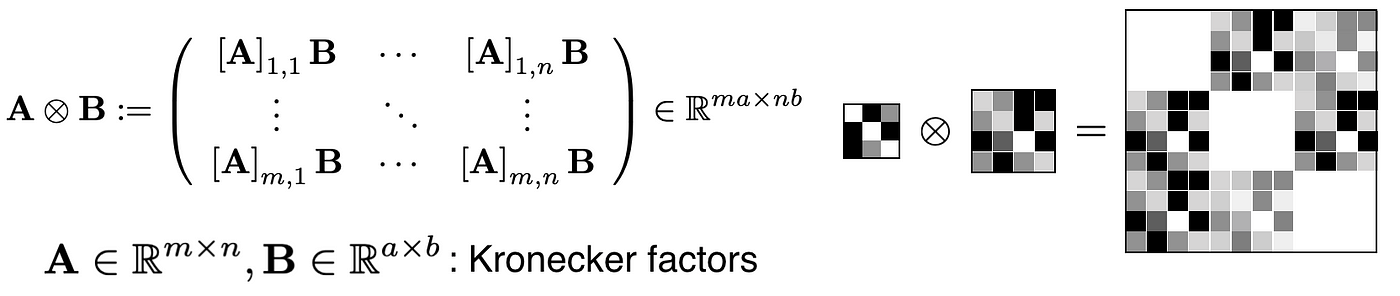

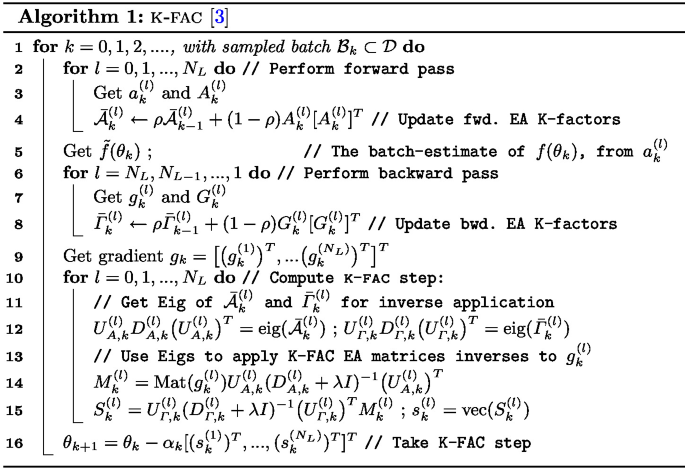

Illustration of the approximation process of KFAC, EKFAC, TKFAC and TEKFAC.

GitHub - Thrandis/EKFAC-pytorch: Repository containing PyTorch code for EKFAC and K-FAC preconditioners.
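The K-FAC preconditioner can be sketched for a single fully connected layer. This is a minimal NumPy illustration, not code from the Thrandis/EKFAC-pytorch repository. It assumes the standard Kronecker factorization of the layer's Fisher block, F ≈ A ⊗ G, where A = E[a aᵀ] is the covariance of the layer inputs and G = E[g gᵀ] is the covariance of the gradients with respect to the layer outputs. The function name `kfac_precondition`, the per-factor damping scheme, and the random test data are illustrative assumptions.

```python
import numpy as np


def kfac_precondition(grad_w, acts, grads_out, damping=1e-3):
    """Apply a K-FAC style preconditioner to one linear layer's gradient.

    Hypothetical helper for illustration only.
    grad_w:    (out, in) weight gradient dW
    acts:      (batch, in) layer inputs a
    grads_out: (batch, out) gradients g w.r.t. the layer outputs
    """
    n = acts.shape[0]
    # Kronecker factors of the Fisher block: F ≈ A ⊗ G
    A = acts.T @ acts / n
    G = grads_out.T @ grads_out / n
    # Simple Tikhonov damping added to each factor separately (an assumption)
    A_d = A + damping * np.eye(A.shape[0])
    G_d = G + damping * np.eye(G.shape[0])
    # For symmetric factors and column-major vec:
    # (A_d ⊗ G_d)^{-1} vec(dW) = vec(G_d^{-1} dW A_d^{-1})
    left = np.linalg.solve(G_d, grad_w)       # G_d^{-1} dW
    return np.linalg.solve(A_d, left.T).T      # (G_d^{-1} dW) A_d^{-1}


# Usage: compare against the explicit (in*out) x (in*out) Kronecker inverse
rng = np.random.default_rng(0)
acts = rng.normal(size=(64, 5))
grads_out = rng.normal(size=(64, 3))
grad_w = grads_out.T @ acts / 64
step = kfac_precondition(grad_w, acts, grads_out)

A_d = acts.T @ acts / 64 + 1e-3 * np.eye(5)
G_d = grads_out.T @ grads_out / 64 + 1e-3 * np.eye(3)
explicit = np.linalg.solve(np.kron(A_d, G_d), grad_w.flatten(order="F"))
print(np.allclose(step.flatten(order="F"), explicit))  # True
```

The point of the factorization is that only the small in×in and out×out factors are ever inverted, never the full Fisher block. EKFAC (eigenvalue-corrected K-FAC) additionally re-estimates the scaling in the eigenbasis of these factors; this sketch does not attempt that.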